The Right Half of the Curve: Why Late AI Adoption Threatens Your Team

Dave Graham

Principal Consultant

January 8, 2026

The Loop That Builds Itself

The agentic coding community has been abuzz lately about the "Ralph Wiggum loop":

```bash
while :; do cat PROMPT.md | claude -p ; done
```

Same prompt, fed repeatedly. The agent sees its own previous work in the files, iterates, improves, ships.

Geoffrey Huntley announced this technique in summer 2025 as a simple bash while loop. By the end of 2025, it went viral. Now it's an official plugin in Claude Code's marketplace that adds structured completion detection:

```
/ralph-loop "Build a REST API with CRUD operations and tests.
Output <promise>COMPLETE</promise> when done." --max-iterations 50
```
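To make the mechanics concrete, here is a minimal bash sketch of a bounded loop with completion detection. It is not the plugin's implementation: the function name `ralph_loop`, the `AGENT_CMD` variable, and the default of `claude -p` (Claude Code's non-interactive print mode) are assumptions for illustration. It feeds the same prompt file on every pass, stops as soon as the agent's output contains `<promise>COMPLETE</promise>`, and gives up after a maximum number of iterations.

```bash
#!/usr/bin/env bash
# Minimal sketch of a bounded "Ralph" loop with completion detection.
# Hypothetical: ralph_loop and AGENT_CMD are illustrative names, not the
# official plugin's API. AGENT_CMD must read a prompt on stdin and print
# its response on stdout; it defaults to Claude Code's print mode.

ralph_loop() {
  local prompt_file="$1"
  local max_iterations="${2:-50}"
  local agent_cmd="${AGENT_CMD:-claude -p}"
  local promise='<promise>COMPLETE</promise>'
  local i=1
  local output

  while [ "$i" -le "$max_iterations" ]; do
    # Same prompt every pass; the agent picks up its previous work from the files.
    # $agent_cmd is deliberately unquoted so "claude -p" splits into command + flag.
    output="$($agent_cmd < "$prompt_file")"
    # Echo the agent's output to stderr so progress stays visible.
    printf '%s\n' "$output" >&2

    # Stop as soon as the completion promise appears anywhere in the output.
    case "$output" in
      *"$promise"*)
        echo "Completed after $i iteration(s)."
        return 0
        ;;
    esac
    i=$((i + 1))
  done

  echo "Stopped: no completion promise after $max_iterations iteration(s)." >&2
  return 1
}
```

Usage: `ralph_loop PROMPT.md 50`. The iteration cap is the important design choice: without it, a loop whose agent never emits the promise keeps running (and billing) indefinitely.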

The name comes from The Simpsons character Ralph Wiggum, whose dim-witted yet optimistic perseverance lets him succeed despite setbacks. Huntley's key insight: the failures are "deterministically bad in an undeterministic world." Predictable failures mean you can systematically improve through prompt tuning.

The results speak for themselves. Huntley ran a 3-month loop that built Cursed, a programming language (think Go, but with Gen Z slang as keywords 💀):

```
yeet "vibez"

slay main_character() {
    sus i normie = 1
    bestie i <= 100 {
        ready i % 15 == 0 {
            vibez.spill("FizzBuzz")
        } otherwise ready i % 3 == 0 {
            vibez.spill("Fizz")
        } otherwise ready i % 5 == 0 {
            vibez.spill("Buzz")
        } otherwise {
            vibez.spill(i)
        }
        i = i + 1
    }
}
```

YC hackathon teams have shipped 6+ repos overnight using the technique. One engineer completed what would have been a $50k contract for $297 in API costs.

The Automation Conversation We Keep Having Wrong

Two narratives dominate the discourse. The doom version says AI will replace developers and eventually every knowledge worker. The cope version insists AI can't do real engineering: that it just autocompletes or, worse, builds buggy spaghetti code that leaves mountains of illegible technical debt.

Both miss the point.

AI isn't replacing developers wholesale. It's dramatically amplifying what one developer can do, and individual productivity is compounding fast.

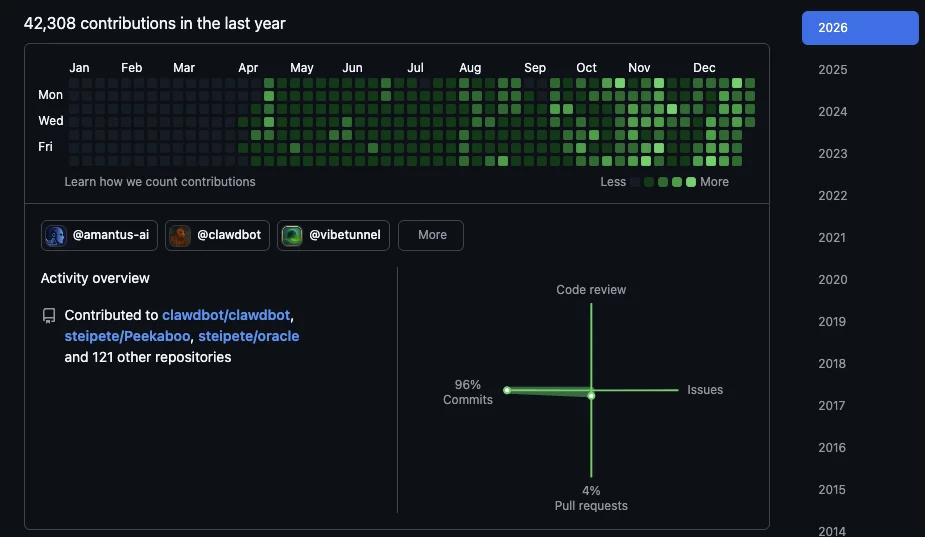

Peter Steinberger's GitHub heatmap. 42,000+ contributions in a year. This is what AI-native development looks like.

Consider the math. A team of 5 now ships what a team of 10 shipped two years ago. One senior dev with AI ships what a senior plus two juniors used to. The "10x developer" meme is becoming literal, but only for those who've adapted.

Spreadsheets offer a useful historical parallel. They didn't eliminate accountants. They eliminated some accountants and transformed what the rest did. Those who adapted became more valuable. Those who didn't became redundant.

What happens when half your industry is 3x more productive than the other half?

How This Shows Up in Engineering Orgs

The Ralph Wiggum loop represents just one pattern. Others are emerging:

| Pattern | What It Does | Implication |
|---|---|---|
| Ralph loops | Iterative task completion | Less babysitting, more delegation |
| Multi-agent orchestration | Parallel workstreams | One person, multiple "teams" |
| Agentic debugging | Self-correcting code | Faster iteration cycles |
| Spec-to-ship pipelines | Requirements → deployed code | Faster time to production |

For engineering organizations, the implications cut deep. Junior dev work faces the highest exposure: boilerplate, CRUD operations, test writing. Senior work shifts toward architecture, judgment, and prompt engineering. The "glue" work (code review, mentoring, coordination) becomes more valuable, not less.

One uncomfortable question looms over hiring plans: if one senior dev plus AI can do what a team of four did before, what happens to the other three seats?

The answer depends entirely on where you sit on the adoption curve.

The Right Half of the Curve

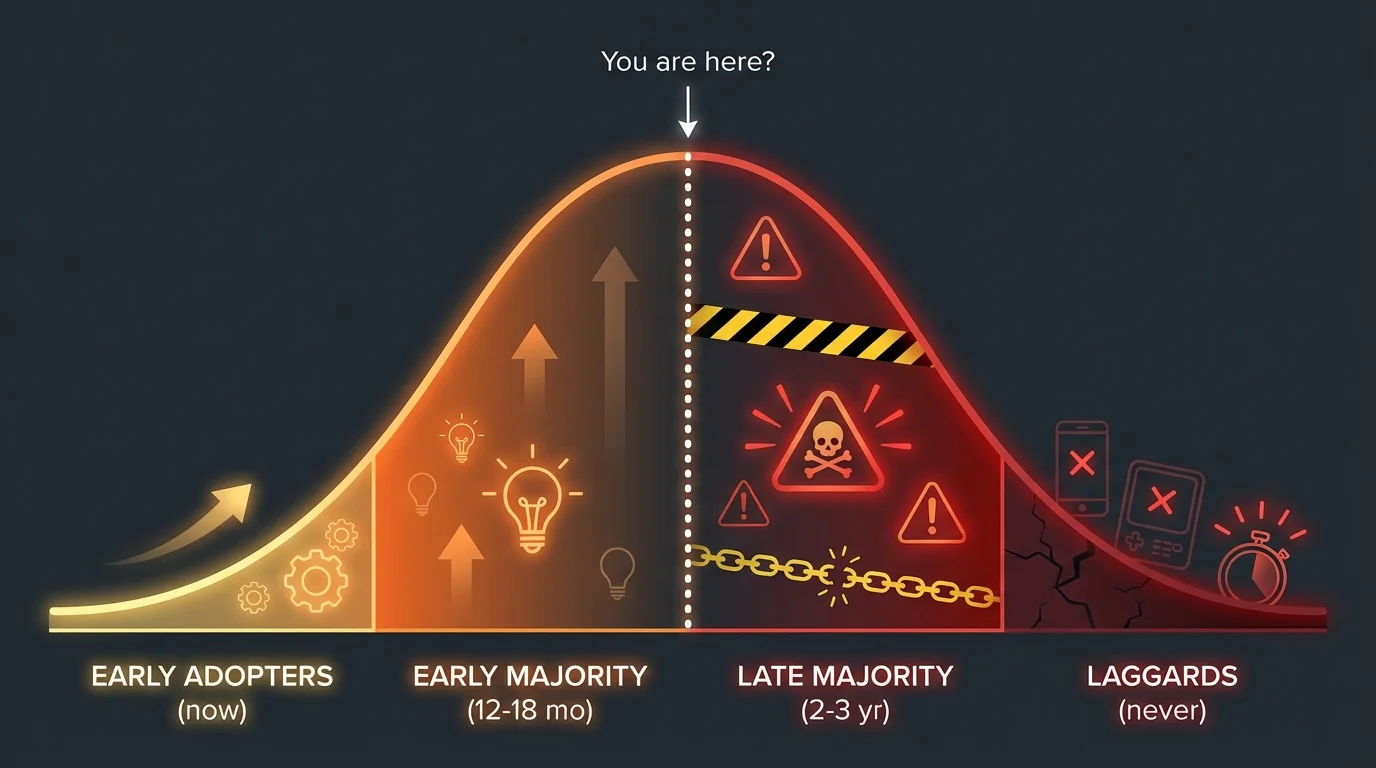

The adoption curve creates a stark divide:

Organizations on the left (early adopters) are already shipping with AI. Their productivity gains compound. They're defining what "normal" looks like for everyone else.

Organizations on the right (late majority and laggards) face a different reality. They're competing against teams 2-3x more productive. Their cost structures look increasingly bloated. They're hiring for roles that won't exist in 18 months.

The delta between these groups is the threat.

When your competitor ships in 2 weeks what takes you 2 months, you don't get to blame AI. You get to blame the 18 months you spent "evaluating" while they were adopting.

Who's actually at risk? Developers who haven't adapted. Junior engineers at organizations that don't reskill them. Entire teams at organizations that react rather than prepare.

The compounding problem makes this worse over time. Through practice, early adopters improve at using AI faster than everyone else. Late adopters face a steeper learning curve and competitive pressure at the same time. The gap widens.
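A toy calculation shows why delay compounds even when both groups learn at the same pace. Assume, purely for illustration, that AI-assisted productivity (normalized to 1 at the moment a team adopts) improves 5% per month through practice, at the same rate for everyone, and that late adopters start 18 months after early adopters. Both numbers are hypothetical, not measurements.

$$
P_{\text{early}}(t) = 1.05^{\,t}, \qquad P_{\text{late}}(t) = 1.05^{\,t-18} \quad (t \ge 18,\ t \text{ in months})
$$

$$
\frac{P_{\text{early}}(t)}{P_{\text{late}}(t)} = 1.05^{18} \approx 2.41,
\qquad
P_{\text{early}}(t) - P_{\text{late}}(t) = 1.05^{\,t-18}\left(1.05^{18} - 1\right)
$$

Even at identical learning rates, the 18-month head start locks in a roughly 2.4x ratio, and the absolute gap grows every month because it scales with $1.05^{\,t-18}$. If early adopters also learn faster, the ratio itself widens as well.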

The Change Management Opportunity

How you manage this transition matters more than which tools you pick.

Organizations that treat AI adoption as "buy tools, hope for productivity" will shed headcount reactively when competitors outpace them. They'll lose institutional knowledge through layoffs and face culture damage from fear and uncertainty.

Organizations that treat AI adoption as a people transformation will get different results. They'll reskill existing talent before the pressure hits. They'll redesign roles and business processes around human-AI collaboration. They'll build competitive advantage through workforce capability.

The playbook breaks into four phases:

Assess the exposure (Now): Which roles have the highest AI augmentation potential? Where are your people on the adoption curve? What's your 18-month competitive exposure?

Build AI fluency (0-6 months): Focus on building intuition for human-AI collaboration, not just tool training. Make pair programming with AI standard practice. Create safe experimentation environments outside production pressure. Reward the experimentation itself.

Redesign roles (6-12 months): Transform junior roles into AI-augmented acceleration paths. Shift senior roles toward architecture, judgment, and mentoring. Create new roles around prompt engineering, AI ops, and human-AI coordination.

Restructure gradually (12-18 months): Use attrition for right-sizing rather than layoffs. Redeploy productivity gains to new initiatives rather than headcount cuts. Build a culture of continuous adaptation.

The alternative means waiting until competitors force the issue. Layoffs under pressure. Lost talent, damaged culture, playing catch-up from behind.

The pitch to leadership writes itself: "We can do this proactively over 18 months, or reactively in 3 months when the market forces it. One preserves our team and culture. One doesn't."

The Window Is Now

I don't think the Ralph Wiggum loop is the end state of how we'll work. But I do know the way we've been working is about to change.

Your organization will adopt AI-augmented labor. The only variable is whether it happens on your terms or your competitors'.

Eighteen months from now, the early adopters will be hiring from the laggards.

If you're a leader seeing this shift and wondering how to prepare your organization, let's talk. We help teams assess their AI exposure, build adoption roadmaps, and design change management strategies that keep people while transforming capabilities.